dapit

death_star% cat /etc/fstab

# Device Mountpoint FStype Options Dump Pass#

/dev/ada1a / ufs rw 1 1

/dev/ada0a /var ufs rw 2 2

/dev/ada0b none swap sw 0 0

/dev/ada0d /home ufs rw 2 2

proc /proc procfs rw 0 0

./pascal.sh

mh okay it seems that my bareos server is running

however on my linux client the bareos client now fails to start

Can't open PID file /var/lib/bareos/bareos-fd.9102.pid (yet?) after start: No such file or directory

does someone know how i can resolve this issue?

./pascal.sh

this pid file is actually present

and it's owned by user root and group bareos

4 -rw-r----- 1 root bareos 5 Jun 30 12:50 /var/lib/bareos/bareos-fd.9102.pid
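A minimal sketch for double-checking a PID file like the one listed above: it reads the PID and tests whether a process with that number exists. The function name `inspect_pid_file` is made up for illustration, and this check is only an assumption about what is worth verifying; it does not explain why the service manager reports the file as missing.

```python
import os


def inspect_pid_file(path):
    """Return (pid, alive) for a PID file, or (None, False) if it is unusable."""
    try:
        with open(path) as fh:
            pid = int(fh.read().strip())
    except (OSError, ValueError):
        # Missing file, unreadable file, or content that is not a number.
        return None, False
    try:
        os.kill(pid, 0)  # signal 0 only checks for existence, nothing is sent
    except ProcessLookupError:
        return pid, False  # stale PID file: no such process
    except PermissionError:
        return pid, True  # process exists but belongs to another user
    return pid, True


if __name__ == "__main__":
    # Path taken from the error message in the chat.
    print(inspect_pid_file("/var/lib/bareos/bareos-fd.9102.pid"))
```

With mode `-rw-r-----` and owner root:bareos, the file is readable only by root and members of the bareos group, so run the check as one of those.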

Anonymous

This won't work. I think zfs will not work with two different drives

Take any boot CD and wipe your disks (i.e. remove all partition tables). Start from scratch and re-create your disk layout as you have shown above with the FreeBSD installer. Install. You should be able to boot then. Everything you can configure within the installer is valid for ZFS. Indeed: UFS is faster than ZFS, but not as robust. Another important hint: during my ZFS installations I NEVER created an fstab file, neither automatically nor manually. Once a ZFS pool is created and you have installed into it, the system automatically knows what to do with it. You can export/import data pools. Root-on-ZFS pools "are just there to be used" at boot time: no manual adjustments whatsoever, no fstab editing needed!

dapit

Thank you, I will do it again once my bigger SSD comes. Even though it'll be able to use the whole drive, I will try to put /home on a different drive. I have to read some more about ZFS and its pools; I understand the concept and the features, but don't know how to manage it

dapit

I am my help desk man 😭😭😭😭😭😭

but this is really frustrating

That's our life, yes? We don't choose the easy way 🤪

dapit

And also you were right, data transfer is faster from the outer tracks to the inner (from the Handbook, sec. 2.6.1)

Anonymous

The effort is definitely worth it. Remember: what you can configure within the installer will be usable. But start with clean disks (no partitions at all, or a simple partition table with clean NTFS, FAT32 or ext4 partitions). You have to make sure that there are no ZFS remnants or UEFI boot partitions on your HDDs. Creating stripe sets from disks of any size, type and number should be no problem at all for ZFS. You only need to learn more about this filesystem if you plan to create redundant (fail-safe) raidz1/2/3 pools OR mirrors with many drives (7+). Then you need to follow special recommendations in order to avoid future trouble. Before your next attempt, read the FreeBSD Handbook chapters dealing with ZFS. Basically, you deal with your ZFS machine via 2 command families: "zfs" and "zpool", like "zfs list" or "zpool status".

dapit

Btw, when I chose automatic ZFS, it took the whole 16 GB SSD and booted normally. That's why I thought it wouldn't work with 2 drives.

Anonymous

Take the easy approach then. Earlier we already discussed that zfs loves whole drives. Experiment with such an easy setup and grow complexity later.

Anonymous

One may become a world traveller while finding / connecting to 'local' freebsd-helpdesks / user groups these days... 😉 ... that's just a wild guess and aims for surroundings in the backcountry

Anonymous

On the other hand, it may get annoying for a real pro to explain the basics 1,000 times to 10,000 people...

./pascal.sh

do you have experience in backup software on freebsd?

Anonymous

not yet - but their handbook says it should be definitely usable for home-directories (you can even exclude files/folders, AFAICR)

Anonymous

A small example: I synchronize 1300+ EPUBs in one folder across 2 Raspberry Pis, 1 Linux and 1 FreeBSD workstation. With rsync this is already a very annoying manual effort. With Syncthing it is almost a point-and-click solution

Anonymous

Otherwise, I'm still also looking for the best way to go regarding backups. So far, I used whole machines as cold spare backups. That sounds expensive, but I think it still was/is cheaper than a professional tape library solution.

Anonymous

Meanwhile, I'm convinced it is a very, very hard thing to do, when you gradually want to leave a Windows-world behind and transfer your 'historical' data into a Linux/Unix world. There are no books around dealing with this. At least according to my limited knowledge.

Anonymous

Beyond this, my problem is to synchronize all data from quite a number of external HDDs, old system drives or whole computers into one big pool. My data growth rate always exceeded my purchase capacity for storage monsters (professional gear) 😉 - discipline is hard to keep, especially in the long run...

Anonymous

As I never lost any valuable data my backup strategies were not so bad. On the other hand my sad record for data redundancy a couple of years ago led to a file, which I had in 48 copies across countless archives ... 😞

Anonymous

then it doesn't synchronize via relay-servers in the internet, but straight from computer to computer at your home

Anonymous

nevertheless: the methodology is very similar to torrents (jigsawing your files into thousands of pieces and gluing them together at the destination), therefore it's a lazy, safe way but surely not the quickest
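The "jigsaw and glue" idea can be sketched roughly as follows. This is only an illustration of block-wise transfer with per-block hashes, not Syncthing's actual implementation; the block size and the function names `split_into_blocks` and `reassemble` are assumptions made for the example.

```python
import hashlib

BLOCK_SIZE = 128 * 1024  # illustrative block size (an assumption)


def split_into_blocks(data, block_size=BLOCK_SIZE):
    """Cut data into fixed-size blocks and pair each with its SHA-256 digest."""
    blocks = []
    for offset in range(0, len(data), block_size):
        chunk = data[offset:offset + block_size]
        blocks.append((hashlib.sha256(chunk).hexdigest(), chunk))
    return blocks


def reassemble(blocks):
    """Verify every block against its digest, then glue the blocks together."""
    out = bytearray()
    for digest, chunk in blocks:
        if hashlib.sha256(chunk).hexdigest() != digest:
            raise ValueError("block failed verification")
        out += chunk
    return bytes(out)


if __name__ == "__main__":
    payload = bytes(range(256)) * 2000  # 512,000 bytes of sample data
    pieces = split_into_blocks(payload)
    print(len(pieces), reassemble(pieces) == payload)
```

Verifying each piece on arrival is what makes the approach safe: a damaged block can be fetched again on its own instead of restarting the whole file.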

Anonymous

Wtf

It does WHAT?

It's a file-chopper, which torrents the pieces over LAN or WAN and re-syncs that mess at the target. A wonderful piece of software. 😉

./pascal.sh

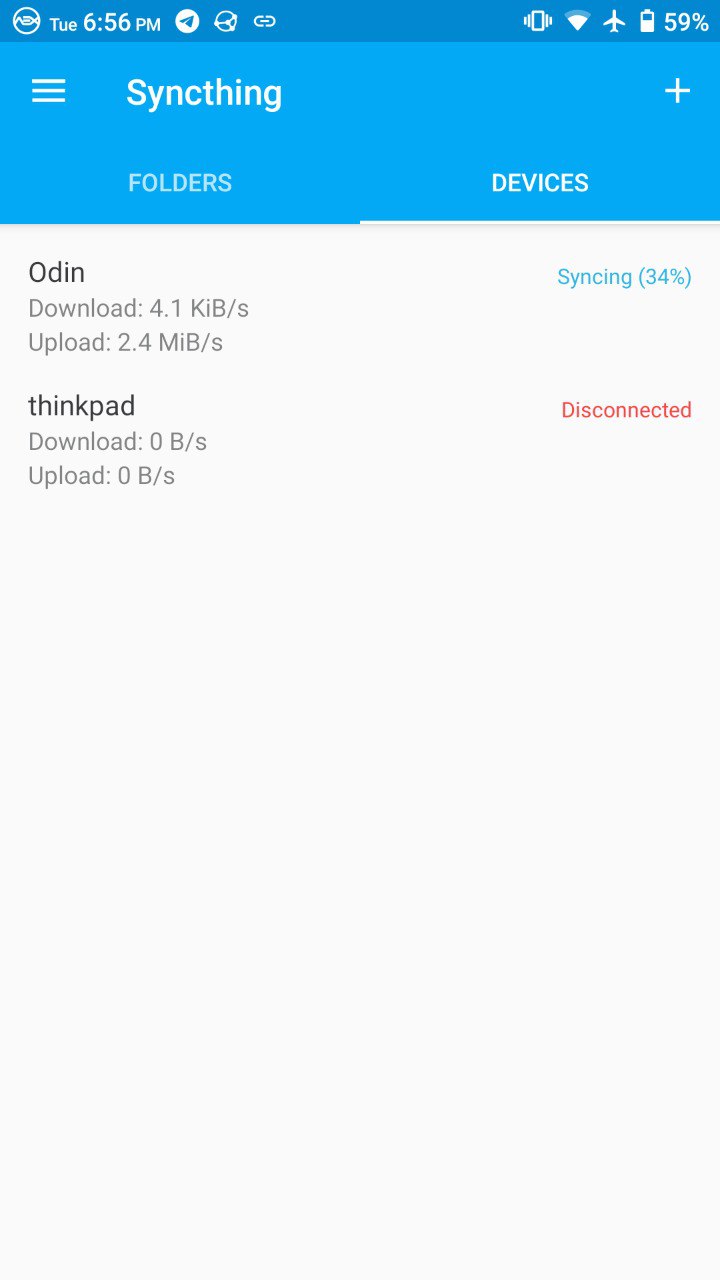

ok i managed to deactivate that

now it's only running locally

however it's still really slow

I'm currently synchronizing with

2.06 KiB/s (2.03 MiB) Down

81 B/s (220 MiB) Up

and that's through my local network I suppose

at least i disabled NAT in syncthing

Anonymous

Don't get nervous. It's slow at the beginning. Then it speeds up to full LAN-capacity.

Anonymous

I saw 60+MB on my LAN, depending on file size (ebooks - remember? Only a couple of them having 50+MB of size, where read/write speed can build up - in contrast to 200kB files)

./pascal.sh

oh wait, for a long time it said "preparing for synchronization" even though it had already synced stuff

now it says "synchronizing"

Mr.