Ares

Azure Cloud Data Engineer

I am looking for an Azure Cloud Data Engineer from Brazil.

Key Responsibilities:

Design, develop, and maintain scalable cloud-based data solutions utilizing Microsoft Azure.

Implement robust data models and data warehouses to support business intelligence and analytics needs.

Develop and optimize ETL processes to ensure accurate and efficient data transfer between systems.

Collaborate closely with stakeholders to gather and translate data requirements into comprehensive technical specifications.

Monitor, troubleshoot, and enhance data pipelines to maximize performance and reliability.

Ensure data security and compliance with relevant industry standards and regulations.

Provide expert technical guidance and support for various data-driven projects across the organization.

Qualifications:

Bachelor's degree in Computer Science, Information Technology, or a related discipline.

Proven experience as a Data Engineer with a strong focus on cloud technologies, specifically Microsoft Azure.

In-depth knowledge of data modeling, data warehousing concepts, and industry best practices.

Hands-on experience with Azure Data Factory, Azure SQL Database, and other Azure data services.

Proficiency in data visualization tools and working with complex workbooks.

Preferred experience with Deltek.

Strong programming skills, particularly in SQL and Python.

Excellent problem-solving abilities with a keen attention to detail.

Ability to work effectively in a collaborative team environment and communicate complex technical concepts to both technical and non-technical stakeholders.

Preferred Skills:

Experience with additional cloud platforms such as AWS or Google Cloud.

Knowledge of big data technologies including Hadoop, Spark, or Databricks.

Familiarity with DevOps practices and tools.

Experience with data governance and data quality frameworks.

Please contact me if you are interested.

P

Spark interview at bytedance

https://medium.com/me/stats/post/5e54b24e2a18

Sh

Freelancer

Need a freelance data engineer for job support.

Experience: 5+ years

Skills: Hadoop/big data technologies, Hadoop ecosystem (HDFS, MapReduce, Hive, Pig, Impala, Spark, Kafka, Kudu, Solr)

Python/PySpark

Minimum 3 years of experience in Spark programming

Chowdhury

I will continue to thank God every day for the help that @CalebMarking offered me through the online investment business. I invested $300 in his legitimate global and profitable trading platform.  Then I easily got $3500 after trading.  I don't believe it will be possible if I decide to invest my small capital.  Right now I am having an unbearable Joy and a sweet heart too, I recommend his platform to you through the company account manager username..

@CalebMarking

K

😀 Watch the full video: Python Dictionary vs Set in Detail | with Methods and Interview Questions: https://youtu.be/YqIe5Cl_1OI

K

Watch this Python video (with English subtitles) to understand advanced functions. Watch till the end: https://youtu.be/jQb8KwUb7IY

Code

[Hiring] Hiring a Data Engineer with 4+ years of experience to design, build, and maintain data pipelines and infrastructure, ensuring optimal performance and reliability across systems.

Alexander

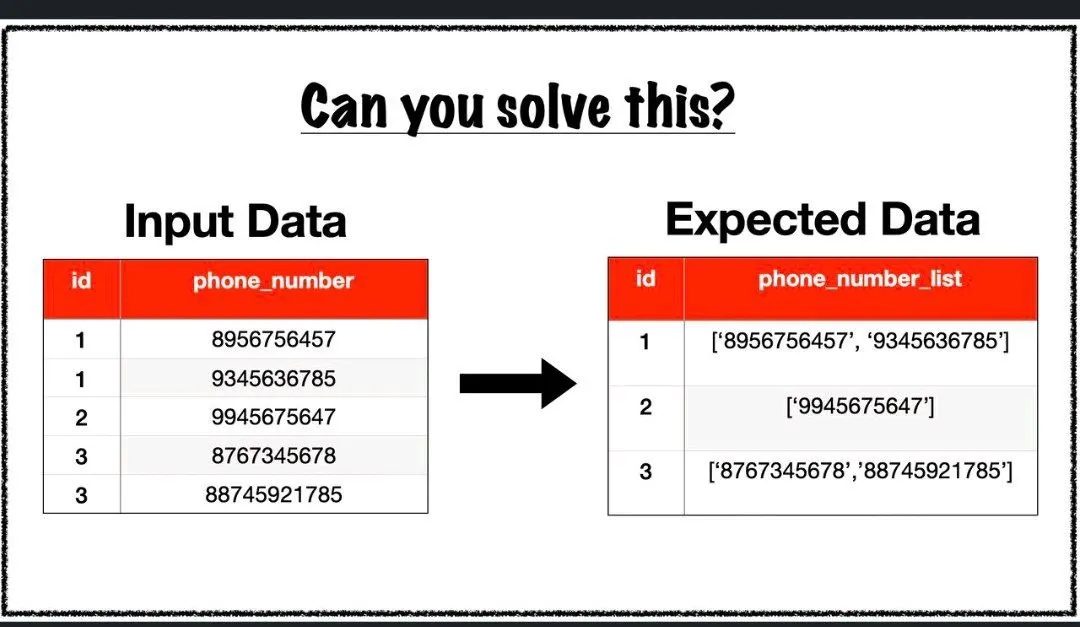

How to achieve this in PySpark:

from pyspark.sql.functions import col, collect_list

df.groupby("id").agg(collect_list(col("phone_number")).alias("phone_number_list"))
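For anyone reading along without a Spark session handy, here is a minimal plain-Python sketch of what the groupby plus collect_list aggregation above computes. The sample rows and the helper name collect_phone_numbers are made up for illustration (the screenshot's data isn't reproduced here). Unlike this sketch, Spark does not guarantee the order of elements inside each collected list.

```python
from collections import defaultdict

# Hypothetical sample rows of (id, phone_number). This data is an assumption
# for illustration only; it is not taken from the original screenshot.
rows = [
    (1, "555-0100"),
    (2, "555-0200"),
    (1, "555-0101"),
    (2, None),  # null values are skipped, mirroring Spark's collect_list
    (3, "555-0300"),
]


def collect_phone_numbers(rows):
    """Group rows by id and gather each id's non-null phone numbers into a list."""
    grouped = defaultdict(list)
    for row_id, phone_number in rows:
        bucket = grouped[row_id]  # touching the key keeps ids that only have nulls
        if phone_number is not None:
            bucket.append(phone_number)
    return dict(grouped)


phone_number_list = collect_phone_numbers(rows)
print(phone_number_list)
```

Each id ends up with one list of its phone numbers, which is the shape the phone_number_list column has after the aggregation.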

Nayla

Hello! Are any folks here involved in data modeling? I would like to get your insights on modeling pain points. DM me for more details.

SunGalaxy

I am looking for a Data Engineer with 4+ years of experience to design, build, and maintain data pipelines and infrastructure.

It's a paid remote role.

praveen

I am looking for a data engineer who is willing to work hybrid in McLean, VA.

The client is looking for an engineer with Spark, Python, Scala, and AWS experience.

If interested, send your resume to info@rishsystemsllc.com

meyer

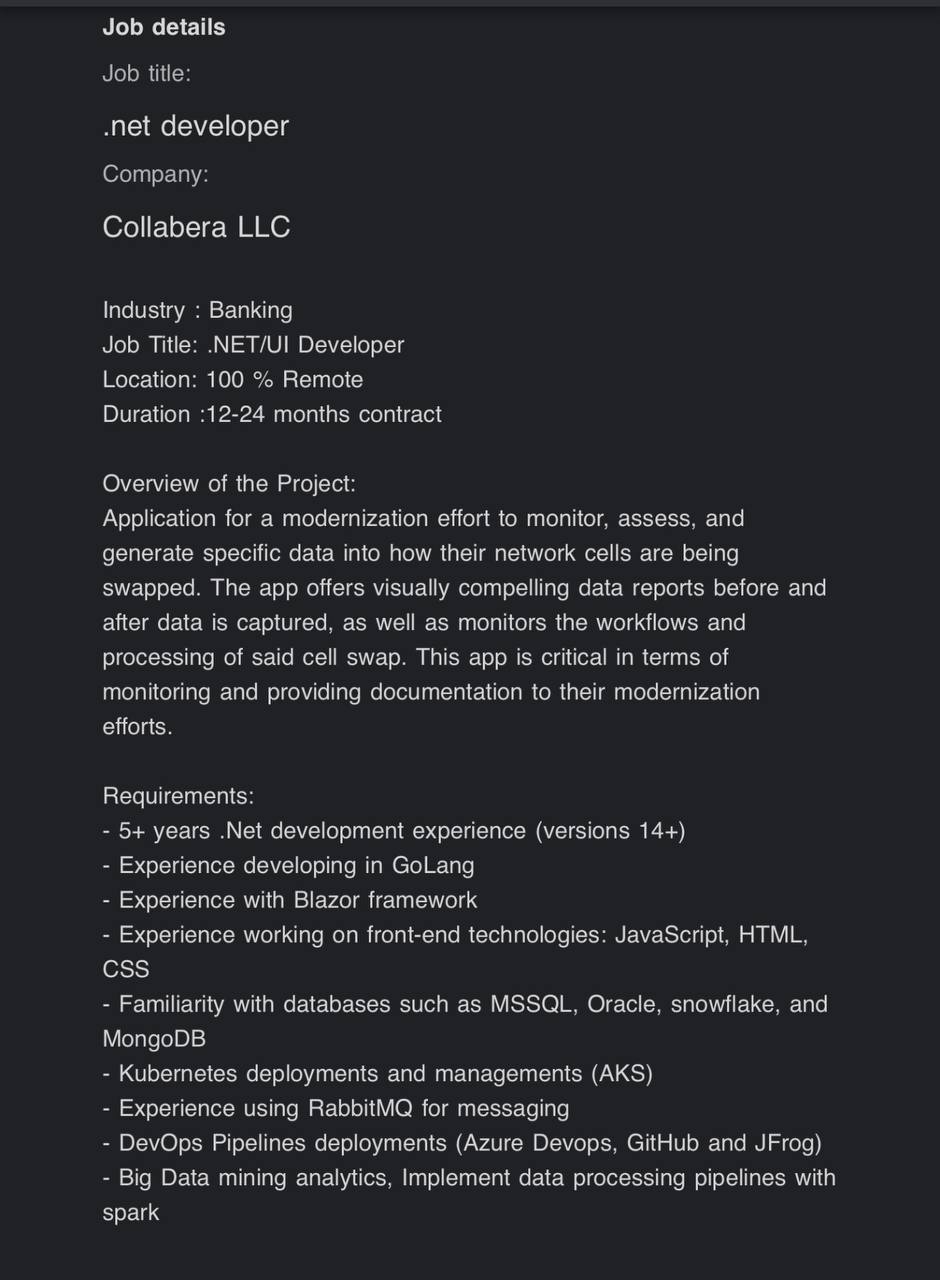

Industry: Banking

Job Title: .NET/UI Developer

Location: 100% Remote

Duration: 12-24 month contract

Overview of the Project:

An application for a modernization effort that monitors, assesses, and generates specific data on how the client's network cells are being swapped. The app offers visually compelling data reports before and after data is captured, and monitors the workflows and processing of each cell swap. It is critical for monitoring and documenting the modernization effort.

Anonymous

I worked as a data engineer at Nike and Dell.

If anyone needs my help, feel free to DM me.

I can help you.

Dk

Hello, I am looking for job support on the tech stack below. Ping me if you are interested.

Tech stack: Python, data experience, AWS (serverless), SQL, Git, Jenkins.

Must-haves: a good mix of web development and data engineering knowledge. Core skills: Python, data pipelines, AWS (serverless), SQL, GitHub, and Jenkins.

* Mainly a backend role with Python, including writing unit test cases.

Code

Is anyone here looking for a job? Please send me a message on LinkedIn.

https://www.linkedin.com/in/stefan-stasinopoulos-65b87333a/

Techie 009

Job Opportunity: Remote Job Support

We are hiring an expert for remote job support (2-3 hours daily, Mon-Fri, from 9 PM IST onwards).

Requirements:

Experience: 5+ years (overall), 3+ years in GCP and PySpark

Proficiency in BigQuery and Apache Airflow

Strong problem-solving skills are a must.

Please DM for details.

Astha

🌟 We are HIRING! 🌟

Profile: Data Engineer

Experience: 2.5+ years

Location: Noida

Work mode: Hybrid

Mandatory skill: Azure Databricks + Azure Data Engineer

Key Responsibilities:

Data Management: Build, maintain, and optimize data pipelines and databases in Snowflake to support sales operations.

Data Integration: Integrate data from various sources, ensuring data quality and consistency.

Data Modeling: Develop and maintain data models to support reporting and analytics needs.

ETL Processes: Design and implement ETL (Extract, Transform, Load) processes to ensure efficient data flow.

Performance Tuning: Optimize database performance and query efficiency.

Design and Development: Lead the design, development, and implementation of data pipelines and data warehouses.

Troubleshooting: Troubleshoot data pipelines and data warehouses to resolve issues promptly.

Stakeholder Communication: Communicate with stakeholders to understand their needs and ensure that projects meet their requirements.

Collaboration: Work closely with the Operations Teams in HQ to understand data requirements and deliver solutions that meet business needs.

Data Security: Ensure data security and compliance with relevant regulations.

Documentation: Create and maintain comprehensive documentation for data processes and systems.

Qualifications:

Experience: 5+ years of experience in data engineering, with a focus on sales data.

Technical Skills: Proficiency in Snowflake, SQL, and ETL tools.

Analytical Abilities: Strong analytical and problem-solving skills.

Communication: Excellent verbal and written communication skills in English.

Collaboration: Ability to work collaboratively with cross-functional teams.

Attention to Detail: High attention to detail and commitment to data accuracy.

Adaptability: Ability to thrive in a fast-paced and dynamic work environment.

If you're ready to make an impact in Data Engineering, we want to hear from you! Also if you know anyone who would be a great fit, please let us know!

You can send your resume to astha.sisodiya@technokrate.com

#DataEngineer #Snowflake #AWS #Mumbai #Pune #Chennai #Hyderabad #Bangalore #kolkatta #Noida #NCR #urgenthiring #immediatejoiner #ETL #SQL #django #Flask #GCP #Madhyapradesh #Punjab #Chandigarh #haryana #Maharashtra #delhi #softwareengineer #jobopportunities #remotejobs #fulltime #experience #communicationskills #skills #DataEngineering #DataJobs #DataScienceJobs #BigData #TechJobs #DataAnalytics #CloudDataEngineer #DataPipeline #DataWarehouse #SQLJobs #PythonDeveloper #MachineLearningEngineer #DataEngineeringJobs #AIJobs #DataOps #Hadoop #SparkJobs #DataArchitect

SunGalaxy

[Hiring]

Looking for data engineers to work remotely.

📍 Requirements:

Position: Remote (Part-time, 1-3 hours/day)

Roles: Multiple positions available

Age: 23+

English Proficiency: C2+ level required

Equipment: Must have a laptop and phone

Skills: Basic knowledge (no problem if none)

Proof of citizenship: Through verified social media accounts

💼 Salary:

$50 - $2,000/month depending on the role

A great opportunity to increase your income with minimal effort!

Please DM me if you are interested.

Scott

SROY

Nimbus

Azure data engineering full course

AWS data engineering full course

Latest batch: August 2024

Data Factory, Databricks, PySpark, Spark SQL, Synapse, basics of Python

120 hours of content

4 real-time, end-to-end projects

20 modules with real-time scenarios

Latest interview preparation kit

50 interview questions asked at MNCs

DM me

KA

#Hiring

#Informatica #IDQ #EDC #AXON #Remote

Company Description

The company specializes in implementing Informatica tools for data governance, including Axon, EDC, and IDQ.

Role Description

The role involves leading the end-to-end implementation of Informatica tools for data governance. Responsibilities include user role creation, tool configuration, metadata mapping, data quality rule development, testing, and user training. Proven professional implementation experience is required.

Qualifications

Expertise in Informatica Axon, EDC, and IDQ.

Hands-on experience with metadata management and data quality processes.

Strong background in data governance frameworks.

Proven experience in professional implementations.

Requirements

Check Prerequisite Activities:

Create user accounts and roles in Axon, EDC, and IDQ.

Perform integration sanity checks between tools.

Collect data quality rules from stakeholders.

Implement Informatica EDC:

Catalog data and map technical metadata to business glossary terms.

Establish data lineage and conduct impact analysis for 90 KPIs.

Build a knowledge graph in EDC.

Implement Informatica IDQ:

Profile data and create data quality rules for six KPIs.

Develop a data quality scorecard.

Implement Informatica Axon:

Create a business glossary and define key business terms.

Map users to business domains and configure Axon-EDC-IDQ integration.

Perform Testing and Validation:

Conduct unit, integration, and user acceptance testing.

Validate data governance processes and resolve any issues.

Migrate to Production:

Transfer configurations, glossary entries, and rules to the production environment.

Perform final system checks.

Conduct User Training and Handover:

Train users on Axon, EDC, and IDQ functionalities.

Provide documentation and post-implementation support.

Conduct a final review and obtain sign-off.

Timeline

Very tight.

Send your CV to obiee2000@gmail.com

Deepak

Azure data engineering full course

AWS data engineering full course

Latest batch: August 2024

Data Factory, Databricks, PySpark, Spark SQL, Synapse, basics of Python

120 hours of content

4 real-time, end-to-end projects

20 modules with real-time scenarios

Latest interview preparation kit

50 interview questions asked at MNCs

DM me

It's free, so please share the Drive link here.

Alexander

Hello, does anyone have the PDF of Fundamentals of Data Engineering?

You may use Google search for this task

praveen

Any data engineer profiles? Hybrid role in McLean, VA.

Spark, Scala, Python, AWS

Email your resume to info@rishsystemsllc.com

Laxmi

Ayobamy

Alexander

Sayed
