Anonymous

BTW why aren't you in the illumos group, then?

Joined the group. I'll have to wait until I've freed up another 64-bit PC for further testing. Thanks anyway... 😊

neb

ZFS, zones, DTrace: more tightly integrated into the kernel than anywhere else (at least in the open-source world).

Jay

ZFS, zones, DTrace: more tightly integrated into the kernel than anywhere else (at least in the open-source world).

How active has development been since OpenSolaris?

Dog

Phoronix

OpenBSD Marks 25th Anniversary By Releasing OpenBSD 6.8 With POWER 64-Bit Support

It was in October 1995 that Theo de Raadt began the OpenBSD project as a fork of NetBSD 1.0 following his resignation from the NetBSD core development team. Now twenty-five years later OpenBSD 6.8 has been released for marking the 25th anniversary of this popular BSD distribution...

neb

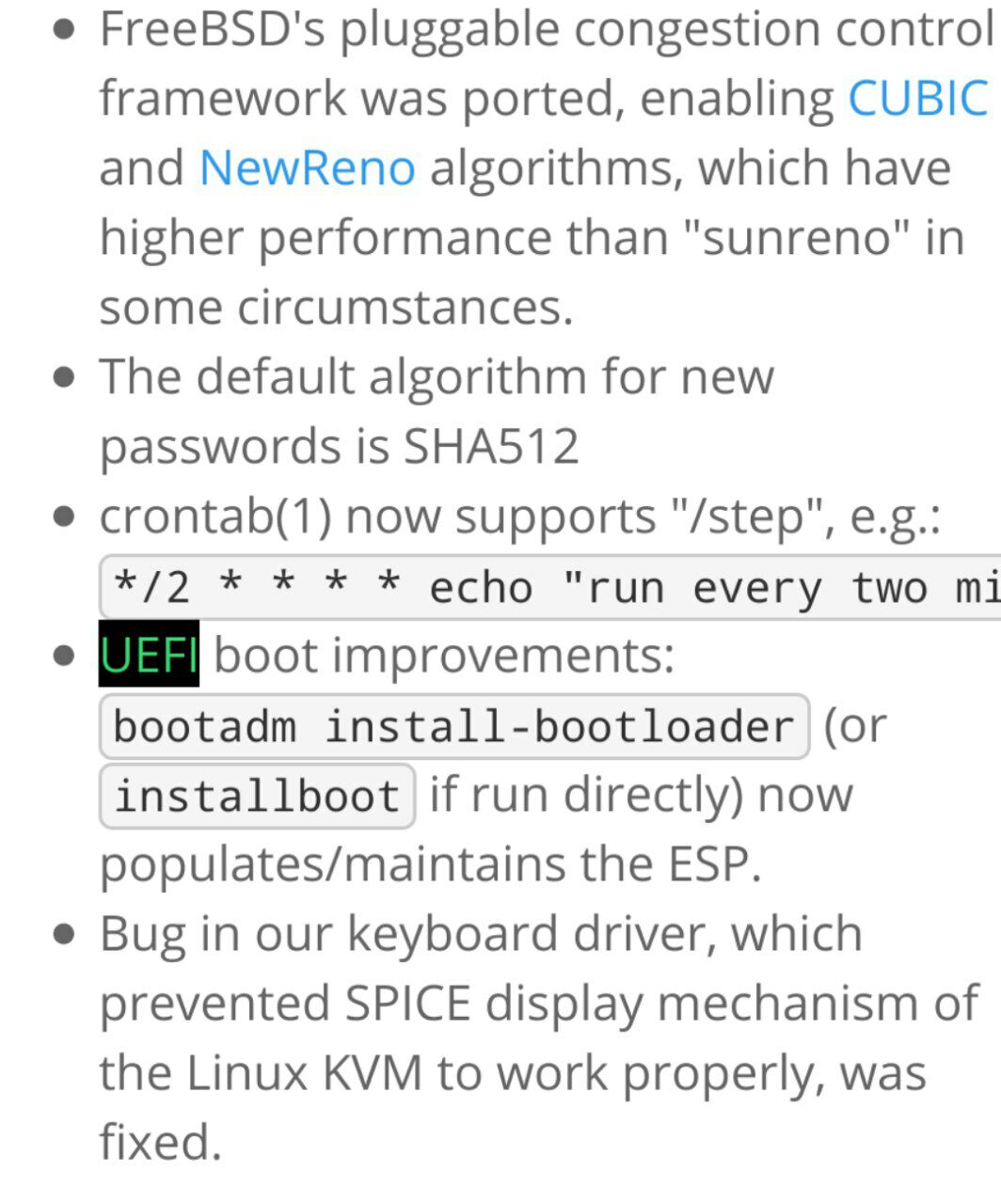

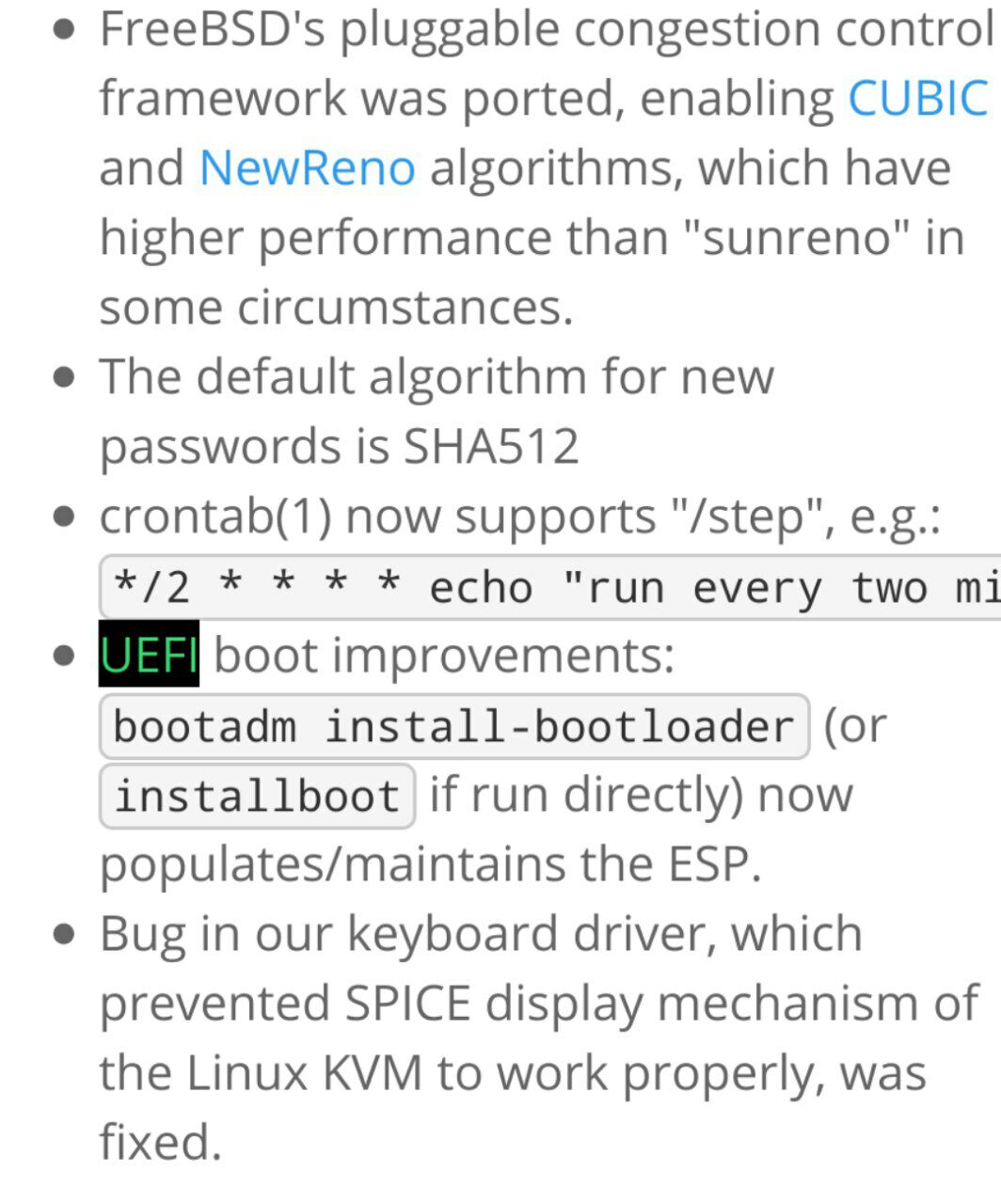

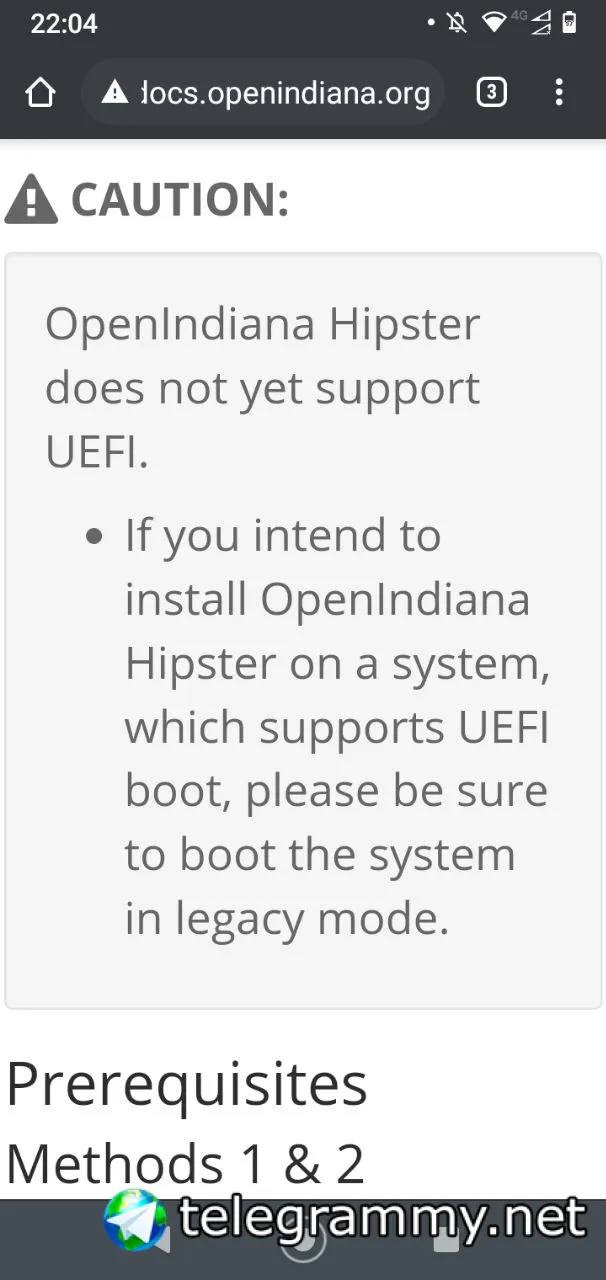

I had read something about that. Hipster is OpenIndiana, right? It didn't have UEFI support, or am I wrong?

aldebaran 🇮🇹

neb

neb

If somebody wants to enhance it, we have a project for it on GitHub: https://github.com/OpenIndiana/oi-docs

neb

I had read it here

http://docs.openindiana.org/handbook/getting-started/#installing-openindiana

I need more evidence to know for sure - I'll ask my members to confirm...

neb

@neb_1984 thank you. I know nothing about Illumos

No problem. I find it extremely intriguing. Lesser-known, high-quality projects seem to attract me :)

hereforyou

hereforyou

Does anyone know how to clone a live ZFS system onto a new hard disk? I need to replace my HDD with an SSD... Most examples I see online are kind of confusing.

hereforyou

Make a recursive snapshot and send/receive it.

Can I do this on my live HDD system with the SSD attached? Not sure how to go about it... Is there some instructional video online?

Krond

1. Create a new zpool on the SSD.

2. zfs snapshot -r old-pool@move

3. zfs send -R old-pool@move | zfs receive new-pool
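
The three steps above can be sketched as a dry run that only prints the commands, so nothing touches a pool before the plan has been reviewed. The function name `plan_pool_copy` and the device name `ada1` are hypothetical placeholders. Two details are assumptions that go beyond the original steps: recursive snapshots take a lowercase `-r`, and `zfs receive` is given `-u` (do not mount the received filesystems) and `-F` (so the stream can replace the freshly created, still empty root dataset of the new pool).

```shell
# Dry-run sketch: print the commands for the three steps instead of running them.
# plan_pool_copy and the example device name are hypothetical.
plan_pool_copy() {
    old_pool=$1
    new_pool=$2
    new_disk=$3
    snap=${4:-move}          # snapshot name, defaults to "move" as in the chat

    # Step 1: create the new pool on the SSD.
    echo "zpool create $new_pool $new_disk"
    # Step 2: recursive snapshot of the old pool (lowercase -r).
    echo "zfs snapshot -r $old_pool@$snap"
    # Step 3: replicate everything; -u and -F are assumptions, see the lead-in.
    echo "zfs send -R $old_pool@$snap | zfs receive -u -F $new_pool"
}

# Example: plan_pool_copy old-pool new-pool ada1
```

Printing first and running by hand afterwards keeps a typo in a pool name from becoming a destructive command.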

hereforyou

Krond

I guess you also need to understand how ZFS mounts filesystems. First remount new-pool somewhere else in the path so the copied filesystems do not overlap the existing ones.

Krond

No. By default the root ZFS filesystem is set to a legacy mount, with all other filesystems set to mount under /. You can check how that is set up on your system with:

zfs list -o name,mountpoint

Krond

In this case, when you try replicating your full filesystem tree, all filesystems in the new zpool will inherit their mountpoints from the old zpool and will be mounted at the same locations.

Krond

If you want to mount a zpool without it overlapping your running system, use zpool import -R /other-root new-pool. That way any filesystem that would be mounted on /var is actually mounted on /other-root/var.
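
To make the effect of the alternate root concrete, here is a small sketch that computes where a given mountpoint would end up under it. The helper name `altroot_path` is hypothetical, and the handling of non-path values such as `legacy` is an assumption: they are passed through unchanged because ZFS does not mount those itself.

```shell
# Hypothetical helper: show where a dataset's mountpoint lands when its pool
# is imported with an alternate root (zpool import -R <altroot> <pool>).
altroot_path() {
    altroot=${1%/}           # drop one trailing slash, so "/other-root/" works too
    mountpoint=$2
    case $mountpoint in
        /)  printf '%s\n' "${altroot:-/}" ;;               # root dataset sits at the altroot itself
        /*) printf '%s%s\n' "$altroot" "$mountpoint" ;;    # altroot is prepended to the path
        *)  printf '%s\n' "$mountpoint" ;;                 # e.g. "legacy" or "none": left as-is
    esac
}

# Example: altroot_path /other-root /var
```

Feeding it the mountpoint column of zfs list -o name,mountpoint gives a quick preview of the layout before importing the pool.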

hereforyou

I'm afraid I'll have to understand the details better before I try these. Thanks for the guidance, though.

Anonymous

Make a recursive snapshot and send/receive it.

Having the same task ahead. Is this going to work?

Strategy for creating a replicated server with zfs send/receive

Environment:

2 machines: system_A & system_B

2 IP addresses: 192.168.1.1 & 192.168.1.2

2 root-on-zfs-pools: zpool_A & zpool_B (zpool_B newer & larger & to be a zfs clone of zpool_A)

Task description:

Replicating a full fs tree with all fs from old zpool_A on system_A to new zpool_B with all inherited mountpoints and mounts to the same locations.

Pre-/post-task command

zfs list -o name,mountpoint

should deliver the same output.

1. install a fresh copy of FreeBSD on system_B (root-on-ZFS on zpool_B; create <mainuser>)

2. use shell on system_A as 'root': <ssh mainuser@192.168.1.2>

3. zfs snapshot -r zpool_A@system_A_recursive_snapshot

4. zfs send -R zpool_A@system_A_recursive_snapshot | zfs receive zpool_B

5. get back to system_B: login as 'root'

6. reboot system_B: login as 'mainuser'
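
A minimal dry-run sketch of the plan above, assuming the commands are run as root on system_A and the stream is piped through ssh instead of logging in to system_B first. The function name `plan_remote_copy` and the target dataset `zpool_B/from_A` are hypothetical. Receiving into a new child dataset with `-u` is an assumption made here so that the running root filesystem of system_B is neither overwritten nor shadowed. It does not by itself make system_B boot from the copy, and the ssh user needs permission to run zfs receive on system_B.

```shell
# Dry-run sketch: print the commands for replicating a pool to another host.
# plan_remote_copy and the "from_A" child dataset are hypothetical.
plan_remote_copy() {
    src_pool=$1
    dst_pool=$2
    dst_host=$3              # e.g. mainuser@192.168.1.2
    snap=${4:-system_A_recursive_snapshot}

    # Recursive snapshot on the sending side (lowercase -r).
    echo "zfs snapshot -r $src_pool@$snap"
    # Send the whole tree and receive it unmounted (-u) below a new dataset.
    echo "zfs send -R $src_pool@$snap | ssh $dst_host zfs receive -u $dst_pool/from_A"
}

# Example: plan_remote_copy zpool_A zpool_B mainuser@192.168.1.2
```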

Krond

If both disks are connected to the same host, you can also just use zpool attach to create a mirror consisting of the two devices. If you only need to switch to the other disk, remove the old disk from the mirror afterwards. If you need the copy for another host, you can take the new disk offline and remove it afterwards. This way you get a clone of your zpool, but I guess you wouldn't be able to attach them both again.
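
The mirror route can be sketched as a dry run as well. The function name `plan_mirror_swap` and the device names in the example are hypothetical, and making the new disk bootable is deliberately left out of this sketch.

```shell
# Dry-run sketch: print the commands for moving a pool to a new disk via a
# temporary mirror. plan_mirror_swap and the device names are hypothetical.
plan_mirror_swap() {
    pool=$1
    old_dev=$2
    new_dev=$3

    # Turn the single-disk vdev into a two-way mirror.
    echo "zpool attach $pool $old_dev $new_dev"
    # Check progress; the old disk must stay until resilvering has finished.
    echo "zpool status $pool"
    # Drop the old disk from the mirror once the new one is fully resilvered.
    echo "zpool detach $pool $old_dev"
}

# Example: plan_mirror_swap zroot ada0p3 ada1p3
```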

Krond

As for receiving snapshots on the running host: I guess that's not possible. It would need to empty all filesystems on that host, and this would require removing the existing mountpoints.

Anonymous

As for receiving snapshots on the running host: I guess that's not possible. It would need to empty all filesystems on that host, and this would require removing the existing mountpoints.

This is exactly what keeps me confused. When I read the ZFS documentation, it says send/receive needs to point towards an 'empty fs'. But how could I create any ZFS filesystem without installing FreeBSD?

Anonymous

Other sources say: do NOT change drives in a running system unless the disks have died abruptly from old-age hardware failures. I know the procedures for replacing HDDs within ZFS mirrors, but this is exactly what I want to avoid, since I already have a second server.

Anonymous

The baseline recommendation is: change HDDs in a running ZFS system only when absolutely unavoidable.

Krond

Well, I guess it's not wise to connect/disconnect them online, as there can be issues if your power supply can't cope with another drive spinning up, or if hot-plugging is not natively supported by the motherboard. Otherwise, if you can power off, add the disk, and power on, there are no limitations.

Krond

My home server currently lives on a 3-disk raidz; I buy cheap refurbished drives for it and replace them when they start failing.

Krond

If you definitely need to transfer the system over the network, take a look at rsync; it makes it easy to replicate all files over the network.
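
A rough dry-run sketch of the rsync route, under the assumption that the target filesystems already exist and are mounted below some directory on the other host (here `/mnt`, a hypothetical choice, as is the function name `plan_rsync_copy`). Because `-x` keeps rsync on one filesystem, each ZFS mountpoint is copied with its own command.

```shell
# Dry-run sketch: print one rsync command per source mountpoint.
# plan_rsync_copy and the /mnt target prefix are hypothetical.
plan_rsync_copy() {
    dst=$1                   # e.g. root@192.168.1.2:/mnt
    shift
    for mp in "$@"; do
        # -a archive mode, -H keep hard links, -x stay on this filesystem,
        # --numeric-ids keep uid/gid numbers instead of mapping by name.
        echo "rsync -aHx --numeric-ids ${mp%/}/ $dst${mp%/}/"
    done
}

# Example: plan_rsync_copy root@192.168.1.2:/mnt / /var /usr/home
```

Unlike zfs send/receive, this copies files only; snapshots and dataset properties are not carried over.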

Anonymous

My home server currently lives on a 3-disk raidz; I buy cheap refurbished drives for it and replace them when they start failing.

Good strategy. Should always work with zfs.

Krond

zfs list -Ho name | xargs -n1 zfs get -H all | awk 'BEGIN{shard="";output=""}{if(shard!=$1 && shard!=""){output="zfs create";for(param in params)output=output" -o "param"="params[param];print output" "shard;delete params;shard=""}}$4~/local/{params[$2]=$3;shard=$1;next}$2~/type/{shard=$1}END{output="zfs create";for(param in params)output=output" -o "param"="params[param];print output" "shard;}'

if you need to extract your current fs structure as a shell script.
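
The one-liner is dense, so here is a shorter sketch of the same idea, not a drop-in replacement. It reads tab-separated zfs get -H output (name, property, value, source) on stdin, keeps only properties whose source is exactly `local`, and prints one zfs create line per dataset in input order. The function name `props_to_create` is hypothetical. Values containing spaces are not quoted, and the line printed for the pool's root dataset is informational only, since that dataset is created by zpool create.

```shell
# Hypothetical helper: turn `zfs get -H all` output (tab-separated columns:
# name, property, value, source) read from stdin into `zfs create` commands.
props_to_create() {
    awk -F '\t' '
        # Remember each dataset the first time it appears, in input order.
        !($1 in seen) { seen[$1] = 1; order[++n] = $1 }
        # Collect only locally set properties as -o property=value options.
        $4 == "local" { opts[$1] = opts[$1] " -o " $2 "=" $3 }
        END {
            for (i = 1; i <= n; i++)
                print "zfs create" opts[order[i]] " " order[i]
        }
    '
}

# Example: zfs list -Ho name | xargs -n1 zfs get -H all | props_to_create
```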

Anonymous

Well, you can boot from CD or a flash drive for example...

I'm trying to avoid setting up the ZFS mirror completely by hand; as far as I know, you can simply rely on the FreeBSD installer.

Krond

It's been years since I used the FreeBSD installer. I just copy or mount and run make DESTDIR=/there installkernel installworld.

Anonymous

YT: v=COOAH_-CLws is quite helpful for zfs send/receive for data replication (backup purposes). My understanding of 'replicating' a server is a little different, though: it means cloning a root pool onto a new machine. I couldn't figure out yet how to do this in the most straightforward way.

Anonymous

Crazy: the release notes list 89 bibliographic references, the majority of them containing "Tanenbaum,A.S."!

Anonymous

https://www.cs.vu.nl/~ast/home/cv.pdf I had the *wrong* impression he was living and teaching in Canada over decades...

Anonymous

If you definitely need to transfer the system over the network, take a look at rsync; it makes it easy to replicate all files over the network.

This is most likely the fastest you can go on zfs (at least when using send/receive for backup purposes): https://papers.freebsd.org/2018/bsdcan/grimes-zfs_send_and_receive_performance_issues_and_improvements/

Anonymous

This article explains the differences between zfs send/receive and rsync. As I understand it, whether the ZFS onboard tools beat rsync depends on data volume and snapshot frequency: https://arstechnica.com/information-technology/2015/12/rsync-net-zfs-replication-to-the-cloud-is-finally-here-and-its-fast/

Anonymous

Anyhow. Is anyone willing to develop a step-by-step solution to this problem together with me? Here, online, in the wide open... 😉?

Anonymous

Let me misinterpret your answer deliberately: 'the place' I had in mind was to put the result into the freebsd handbook (chapter 'zfs administration').

Anonymous

What's your impression of FreeBSD 12.2 RC3?

On one of my laptops, running a legacy Intel card, X Window doesn't work.

mrphyber

What's your impression of FreeBSD 12.2 RC3?

I'm running it on my laptop and everything is fine. I've had only one problem: drm-fbsd12.0-kmod compiled for fbsd12.1 won't run on fbsd12.2 so I had to recompile it after installing the base 12.2 system

Eliab/Andi

I'm running it on my laptop and everything is fine. I've had only one problem: drm-fbsd12.0-kmod compiled for fbsd12.1 won't run on fbsd12.2 so I had to recompile it after installing the base 12.2 system

Ah, OK :) But it's still one week before the final release ;) so some bugs or issues may get fixed ASAP.

ɴꙩᴍᴀᴅ

dapit
