Anonymous

Anonymous

Okay. My initial thought was to create queue of point files that fail and then process through those committing after each entry. That way i was only slowing the process down when duplicates were involved. Is there a better or prefered way to prevent duplicate entries?

Anonymous

Anonymous

I can post my Entity definitions and the function that is doing the work if that is useful information.

Anonymous

Anonymous

class Point(db.Entity):

id = PrimaryKey(int, auto=True)

file_name = Required(str, unique=True)

device_name = Required(str)

point_name = Required(str)

data = Set('PointData')

type = Optional(PointType)

class PointData(db.Entity):

time_data = Required(datetime,6)

value = Required(str)

point = Required(Point)

PrimaryKey(point,time_data)

Anonymous

Anonymous

I've been handling it in memory for the past few months, but I'm looking to move beyond that.

Anonymous

Anonymous

I thought about that, but I wasn't sure about the performance hit. Duplicates are unlikely unless someone tries to load the same file a second time. I thought that I'd be better off just blasting them and then going back through the failures. If the hit isn't bad from exists() though I can do that.

Anonymous

Anonymous

There should only be one CSV file per point during a given month though and that is all that I am processing at a time.

Matthew

Matthew

I think it's likely that exists() would be cheap. If not, you can dedupe in python (maybe a dict with the primary key combo as a key) on a per file basis.

Matthew

Matthew

if PointData.exists(lambda pd: pd.point == my_point and pd.time_data == my_time_data):

# create the PointData

Matthew

Matthew

No problem, I've done similar projects before and I don't remember performance ever being an issue.

Anonymous

Anonymous

I'm currently testing pony 0.7.2 for usage at Dematic

One test seeks to assert that a postgresql and sqlite behave identical for a number of orm.db_session calls. This works perfectly in isolation.

However when I'm trying to switch from one provider to another as a part of a loop, pony bites back with the message that: "'Database object was already bound to %s provider'" (ll 547 in core).

I then looked at the code and found that db.disconnect does not set database.provider = None

(def disconnect(...):... line 599.

Is it me doing something wrong?

Anonymous

Anonymous

The python object "db" was created by a module in global name space. By repacking the python object into a function that instantiates the db as a new object, the problem has been resolved.

Alexander

Alexander

Hi! According to current design, a Database object cannot be "unbinded" from the provider. The disconnect method just closes a DBAPI connection to a database server.

The reason for that behavior is that some properties can be set only after we know specific database server. For example, I may be interested in knowing MyEntity._table_ property which contains a table name for this specific entity. This table name may be different for different databases: MYENTITY in Oracle and myentity in other databases. So, the hypothetical unbind method should also 'reset' all such properties to its initial state, and it may be hard to do.

Currently we suggest to create db object inside a factory function, and make separate instance of db object for each database

Alexander

Alexander

Thanks, please let us know what improvements you'd like to see in pony. This could help us to make it even better ;)

Matthew

Matthew

Hi,

I am getting very bad performance in the view of my web app, I have narrowed it down to code like this:

in for loop:

product = Product.get(lambda p: p.attr1 == x and p.attr2 == y)

using sql_debug, I see it's doing a select query with LIMIT 2, which I suspect is making postgres scan the whole table in every loop, any idea why there's a LIMIT 2?

Matthew

Matthew

product = Product.select(lambda p: p.attr1 == x and p.attr2 == y).limit(1)[😏 or [None])[0] is approx 3x faster than the get, correctly issues a limit 1

Matthew

Matthew

with a correct index, it's a lot faster, but still a 3x difference between limit 1 and limit 2

stsouko

stsouko

The get method assumes that no more than one object satisfies specified criteria. Pony attempts to retrieve more than one object in order to throw exception if multiple objects were found. Sometimes the query condition is wrong and a very big number of objects satisfy the criteria. Pony adds LIMIT 2 in order not to load all that objects to memory.

Matthew

Matthew

Does it take composite_keys into account? That could be an optimisation when you know the database restricts the possible results to be max 1

Matthew

Matthew

Hi, this form should take composite key into account:

Product.get(attr1=x, attr2=y)

I tested this with a composite_key present on attr1 and attr2, it is still LIMIT 2

Alexander

Alexander

Hmm, that's strange. How do you define the composite key? Is it composite_key(attr1, attr2) in entity definition? Remember that composite_index is not sufficient, because composite_index is not unique

Joery

Joery

Roman

Roman

Sorry, I fixed, it but we had hard refactoring and we needed time to test it all. I will ping then it would done(very soon)

Alexey

Alexey

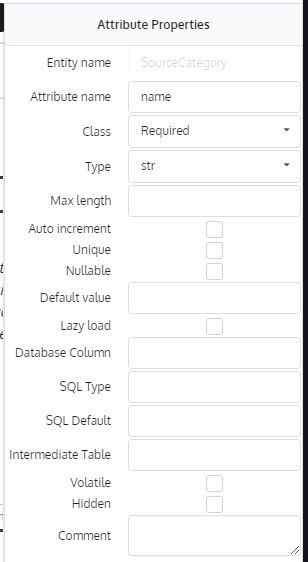

Suggestion: Add an Unsigned checkbox to the editor

Hi Joery,

Where do you suggest adding this?

Alexander

Alexander

I tested this with a composite_key present on attr1 and attr2, it is still LIMIT 2

Hello. I just fixed this bug. Already released on GitHub.

Alexander

Alexander

It's too complex to analyse lambda.

If you know that you are searching by unique key - kwargs is enough.

Alexander

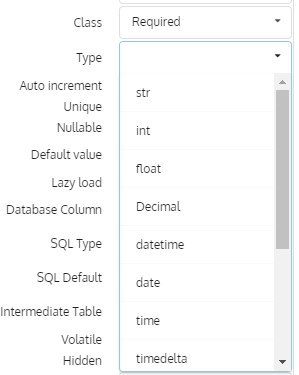

Alexander

In Pony unicode and str mean the same (unicode), for easer porting of application code to Python3. You can use str in models with meaning "unicode"

Joery

Joery

Joery

Joery

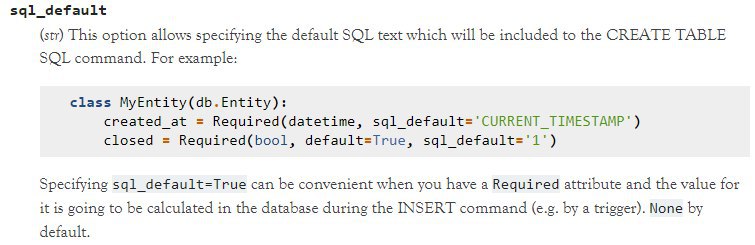

class Example(db.Entity):

test_attribute = Optional(int, size=8, sql_default=0, default=0)

The editor generates the sql_default attribute as an integer, but shouldn't it be a string?

kwerty

kwerty

Hi guys. Do you have best practice or templates for new project which use pony orm ? I mean where I must to store models and where others?

Matthew

Matthew

I start with a single file for a flask + pony project until it gets larger or I need to access the models outside the web app, then I move the models to a models.py

Anonymous

Anonymous

I once made the mistake of using a single models.py file for multiple applications. I needed just a few of the models in each. But when I called db.bind it created all of the models in my database (not just the ones I imported from models.py) and I ended up with clutter. So, don't do what I did :)

Henri

Henri

Is it possible to set a required field in before_insert?

class Article(db.Entity):

slug = Required(str, 200, unique=True)

title = Required(str, 255)

def before_insert(self):

self.slug = self._slugify_url_unique(self.title)