how about now?

And somehow dangerous, could be unexpected if the default differes...

Lucky

Lucky

Alexander

Alexander

Well, the default will be applied to insert only. But if you plqn to create object and to continue work with it in the same transaction, then probably yes

Lucky

Lucky

ApiKey.exists(id=api_key)

(id is type UUID)

raises:

File "/app/src/pony/pony/orm/dbapiprovider.py", line 726, in validate

return UUID(hex=val)

File "/usr/local/lib/python3.5/uuid.py", line 140, in __init__

raise ValueError('badly formed hexadecimal UUID string')

ValueError: badly formed hexadecimal UUID string

Shouldn't it just return False?

Lucky

Lucky

Can pony orm handle Enums in postgres?

https://www.postgresql.org/docs/9.1/static/datatype-enum.html

Micaiah

Micaiah

Can pony orm handle Enums in postgres?

https://www.postgresql.org/docs/9.1/static/datatype-enum.html

Would they use Python enums? Because Python enums are.. interesting.

Lucky

Lucky

Would they use Python enums? Because Python enums are.. interesting.

Yeah, in python I still use

FOO = 1

BAR = 2

stsouko

stsouko

Hello!

class Molecule(db.Entity):

_table_ = (schema, 'molecule')

id = PrimaryKey(int, auto=True)

children = Set('Molecule', reverse='parent', cascade_delete=True)

parent = Optional('Molecule', reverse='children')

merge_source = Set('Molecule', reverse='merge_target') # molecules where self is more correct

merge_target = Set('Molecule', reverse='merge_source') # links to correct molecules

how to set schema for many-to-many table?

stsouko

stsouko

I try to implement DB for storing chemical reactions and molecules with versioning of structure

Alexander

Alexander

You can specify 'table' option for any Set attribute of many-to-many relationship:

merge_source = Set('Molecule', table=['schema_name', 'table_name'])

Alexey

Alexey

Still missing size=64 for ints, and sql_default='NOW()' for datetimes

Try now @luckydonald

size for ints and sql_default options have been added

Lucky

Lucky

If I set sql_default="NOW()" will the datetime field be loaded after creation?

Somehow how I imagine auto=True [0] to work.

The volatile=True [1] allows the database to be changed outside of pony, but does it mean, this is less efficient, because it need to check that field every time I used it (read/write)?

[0] https://docs.ponyorm.com/api_reference.html?highlight=size#cmdoption-arg-auto

[1] https://docs.ponyorm.com/api_reference.html?highlight=size#cmdoption-arg-volatile

Lucky

Lucky

How do I put text[] type in ORM?

http://www.monkeyandcrow.com/blog/tagging_with_active_record_and_postgres/

https://www.postgresql.org/docs/9.6/static/arrays.html

Alexander

Alexander

If I set sql_default="NOW()" will the datetime field be loaded after creation?

Somehow how I imagine auto=True [0] to work.

The volatile=True [1] allows the database to be changed outside of pony, but does it mean, this is less efficient, because it need to check that field every time I used it (read/write)?

[0] https://docs.ponyorm.com/api_reference.html?highlight=size#cmdoption-arg-auto

[1] https://docs.ponyorm.com/api_reference.html?highlight=size#cmdoption-arg-volatile

Volatile attribute values are cached too, but when object is inserted or updated Pony forget values of volatile attributes and should re-read them if the attribute value requested in the same db_session after insert/update. It is a bit less efficient then auto=True, but if you dont't change object too often, the difference should not be too big. For datetme attribute it is possbile to use Python datetime.now default value instead of NOW() SQL function, but it has some limitations. If you use Python's datetime.now default, it is better to store time in UTC timezone.

Alexander

Alexander

How do I put text[] type in ORM?

http://www.monkeyandcrow.com/blog/tagging_with_active_record_and_postgres/

https://www.postgresql.org/docs/9.6/static/arrays.html

Right now Pony does not support PostgreSQL arrays, but in many cases you can use JSON arrays instead, which are supported by Pony.

Lucky

Lucky

Bummer.

Are json lists as performant?

Trying to improve my tagging system, as it has become to slow for answering telegram inline queries in time...

I wanted to try out

http://www.monkeyandcrow.com/blog/tagging_with_active_record_and_postgres/

Alexander

Alexander

I think json lists should be almost as performat as arrays. But in my opinion, neither json lists nor arrays will improve the performance of your query, because it is inherently slow, especially if you want to use in instead of startswith for tag name search.

If I'd write such an application, I'd search tags in Python, and then pass found list of tag's ids to the database. To do that, I'd write a python function which accept a substing and return a set of tag ids which names contain that substring:

tag_cache = defaultdict(set) # substring -> set(tag_id)

@db_session

def populate_tag_cache():

for tag in Tag.select():

string = tag.string.lower()

for i in range(0, len(string)):

for j in range(i+1, len(string)):

substing = string[i:j]

tag_cache[substring].add(tag.id)

def find_tags(substring):

return tag_cache[substring.lower()]

def find_stickers(query_text):

query_text = query_text.lower()

tag_ids = find_tags(query_text) - find_text("nsfw")

pairs = orm.select(

(st.sticker.file_id, max(st.date))

for st in StickerMessage

if (

orm.exists(s_t for s_t in st.sticker.tags if s_t.id in tag_ids) or

orm.exists(p_t for p_t in st.sticker.sticker_pack.tags if p_t.d in tag_ids)

)

)

This is not a complete code (I have no time to help you write entire application), but just a rough idea. I think that for real-time search you should use pre-calculated cache sitting in Python memory and use tha cache to calculate list of tags without hitting the database.

But you will need to update cache manually when you add new tags. This complicates architecture, but improves performance

Lucky

Lucky

Rozen

Rozen

thank you

XZ x n8 v b vcvheeec 8. e bcxvbb c D u c. out boxeo rcio st 6 y. dcbc xS x 8 bcxD 3 za x x x vi cbc 6 xc 68 b 5, Z tey Unix c. 0 8 6.

Lucky

Lucky

I think json lists should be almost as performat as arrays. But in my opinion, neither json lists nor arrays will improve the performance of your query, because it is inherently slow, especially if you want to use in instead of startswith for tag name search.

If I'd write such an application, I'd search tags in Python, and then pass found list of tag's ids to the database. To do that, I'd write a python function which accept a substing and return a set of tag ids which names contain that substring:

tag_cache = defaultdict(set) # substring -> set(tag_id)

@db_session

def populate_tag_cache():

for tag in Tag.select():

string = tag.string.lower()

for i in range(0, len(string)):

for j in range(i+1, len(string)):

substing = string[i:j]

tag_cache[substring].add(tag.id)

def find_tags(substring):

return tag_cache[substring.lower()]

def find_stickers(query_text):

query_text = query_text.lower()

tag_ids = find_tags(query_text) - find_text("nsfw")

pairs = orm.select(

(st.sticker.file_id, max(st.date))

for st in StickerMessage

if (

orm.exists(s_t for s_t in st.sticker.tags if s_t.id in tag_ids) or

orm.exists(p_t for p_t in st.sticker.sticker_pack.tags if p_t.d in tag_ids)

)

)

This is not a complete code (I have no time to help you write entire application), but just a rough idea. I think that for real-time search you should use pre-calculated cache sitting in Python memory and use tha cache to calculate list of tags without hitting the database.

But you will need to update cache manually when you add new tags. This complicates architecture, but improves performance

Had a thought about that.

Currently I already have `1331` unique tags, not sure if that could hit memory very fast.

I had a talk with a guy maintaining a big image board, where tagging and complex queries are a big thing.

He said, he copies relevant tag data into elastic search and use its power of full text search and bool filtering.

As soon as a tag is change it is notified to a redis based task scheduler and processed in background every 2 seconds

Micaiah

Micaiah

I want to be able to send blog posts to my site from a little PyQt app that I wrote. What would be the safest way to confirm that I sent the post?

Micaiah

Micaiah

Right now I'm thinking about encrypting my secret key and sending it with the json packet which gets turned into a post, but I don't know enough about crypto to know if thats a good idea.

Lucky

Lucky

I know, this is probably the wrong group, but you guys know python so well :D

Anyone knows a python substitution for sidekiq/redis ("simple background processing for Ruby")?

I came along RQ ("Redis Queue")

http://python-rq.org/ (via https://devcenter.heroku.com/articles/python-rq )

Romain

Romain

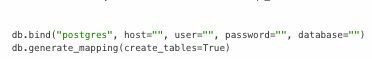

Hello everyone. Any direction to create a postgres database if it doesn't exist? (from within pony)

Alexey

Alexey

Hello everyone. Any direction to create a postgres database if it doesn't exist? (from within pony)

Hi Romain, welcome

Currently there is no way to create a postgres database from Pony - you only can create tables and indexes if they don't exist. The database itself should be created before it can be used in Pony.

Alexander

Alexander

The migration tool is almost ready. I really hope we can release before the end of this week. But I want to be sure it does not contain bugs, so we don't receive a flood of messages "the migration tool broke my tables"

Micaiah

Micaiah

Marchellos

Marchellos

Hi everyone!

I've started using Pony yesterday and haven't actually understood how to use joins yet. In examples I saw when left_join() was used with two for clauses, but when I try to repeat it in my code, I get error like "collection was expected, got "for p in Pond""

Maybe somebody could explain how to use it or point me to docs page where it's already explained?

Alexander

Alexander

Hi Mark! That's a great question, and I'm going to give explanation now. But could you give me a favor? Ask this question at http://stackoverflow.com/questions/tagged/ponyorm, so this answer would be available for all people who will look for it. Thanks

Marchellos

Marchellos

Alexander

Alexander

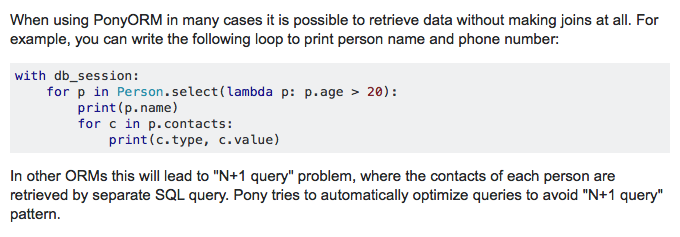

The first query is to retrieve persons, the second one is to retrieve contacts of the first person, and the third one is to retrieve contacts of all other loaded persons

Marchellos

Marchellos

Ok, cool. And is there a way to write a query similar to this:

SELECT *

FROM "public"."person" "p"

LEFT JOIN "public"."contacts" "c"

ON "p"."id" = "c"."person"

Alexander

Alexander

No, Pony does not support such queries, because they are less efficient. In query like this, the same person data will be appeared multiple times, and need to be processed in Python again and again during data retrieving. In most cases it is better to split this query to two where each retrieve data without any unnecessary duplication

Alexander

Alexander

Sure! If you think that StackOverflow answer is on-point, then please mark it as accepted )

Ngalim

Ngalim

Cruor99

Cruor99

Claudio

Claudio